Why leading employment services and software providers are betting on ontologies.

Algorithms are out, datasets are in. Perhaps one of the crucial findings in data science today is that datasets – not algorithms – might be the key limiting factor to developing human-level artificial intelligence. This contention is especially true in the case of solutions for the labor and recruitment market. Many companies in the recruitment market and public employment services are taking notice and are investing in the ontology-based solutions of JANZZ.technology.

Therefore, we have taken a brief moment to lay out the underlying reasons why datasets have become so important. And what qualities these datasets must have in order for businesses to take full advantage.

Over the past years, machine learning as well as deep learning and evidence based systems have achieved remarkable breakthroughs. These have in turn, driven performance improvements across AI components. Perhaps the two branches of machine learning that have contributed most to this are deep learning, particularly in perception tasks, and reinforcement learning, especially in decision making. Interestingly, these advancements have arguably been driven mostly by the exponential growth of high-quality annotated dataset, rather than algorithms. And the results are staggering: continuously better performance in increasingly complex tasks at often super-human levels.

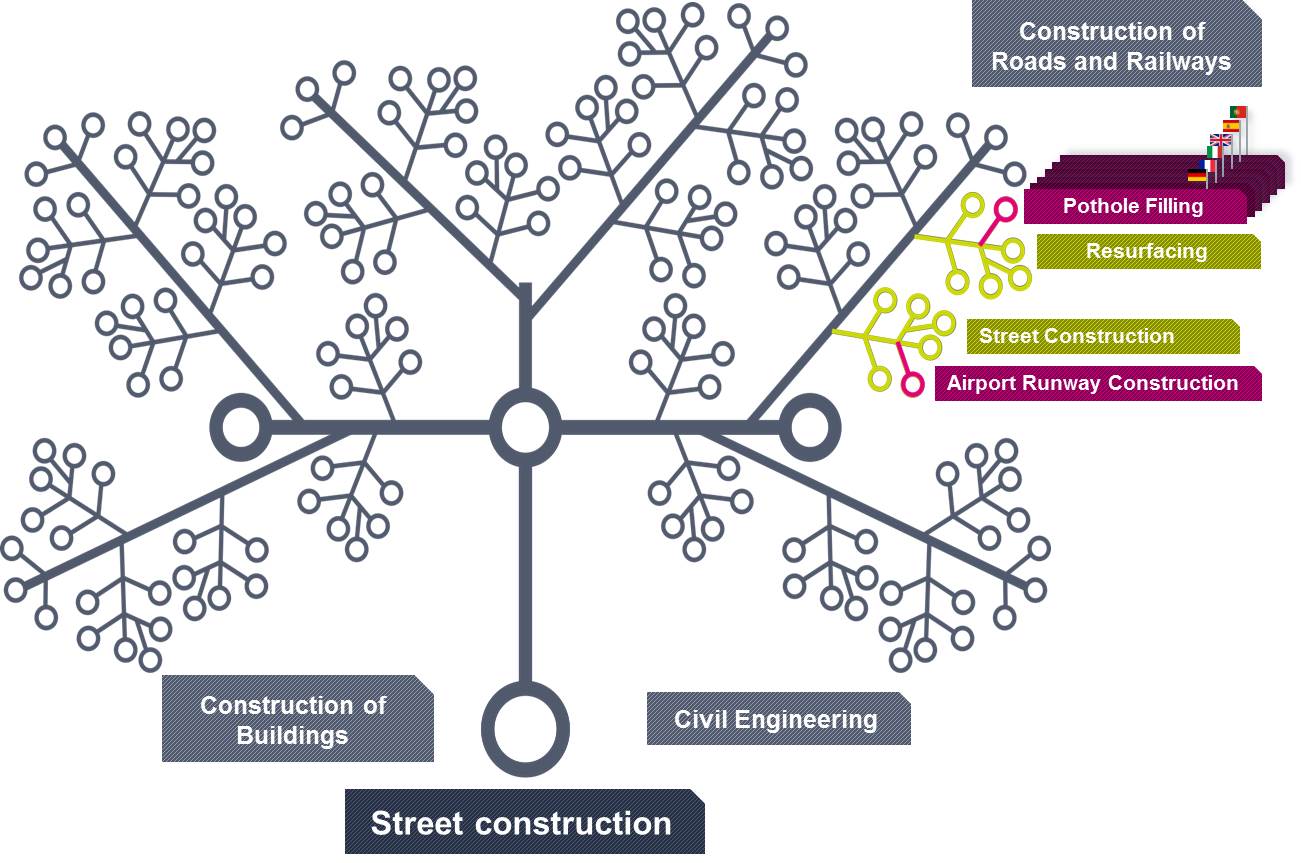

Machine learning thrives on patterns. Unfortunately, our world is full of an almost limitless number of outliers. The labor market in particular is intrinsically a very tough market for automated solutions. Neither job titles, nor skills or educations are in any way standardized across the world or even in a particular country. There is a lot of company, culture and geography specific language involved in the description of jobs and qualifications. Furthermore, implicit phrases like “relevant education” or “relevant experience” are all too common, making job descriptions hard to decipher for machines. Algorithms – even sophisticated ones – have a hard time dealing with such an amount of heterogeneity, implicitness and inconsistency. When one adds the factor or language, it gets even more complicated. Currently, most algorithms struggle to deal with any languages other than English.

Datasets on the other hand, in particular annotated datasets of high quality, can reflect and understand the full bandwidth of the labor market vocabulary. They can also deal with cultural and geographical particularities. However, not all datasets are annotated and of a high quality. Most datasets that companies or public employment services have at their disposal are legacies of the past and therefore often messy, incomplete or inconsistent. Nevertheless, they want to leverage the power of data to improve their applications. Therefore, they need a way to enhance their data with standardized, intelligent meta-data.

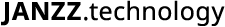

This is where ontologies come into play. An ontology formally represents knowledge as a hierarchy of concepts within a domain. Concepts are linked to each other through different relations. Through the relations that have been set and the location of the term in the ontology the meaning of a specific term becomes interpretable for a machine. An ontology is a dataset but it is of such high quality that it can also help improve the quality of other datasets.

JANZZ.technology focuses solely on the labor market and its ontology is the largest multilingual encyclopedic knowledge database in the area of occupation data, in particular jobs, job classifications, hard and soft skills and qualifications. The occupation and skills ontology can help companies and public employment services in many respects. It can serve as the basis of matching engines, parsing tools, natural language processing or classification tools, improving the results and learning of these tools significantly. More specifically, it can enhance job and candidate matching processes, CV parsing, benchmarking, statistical analyses and much more.

Positive that high data quality is going to create a competitive advantage for them, many stakeholders in the global labor market are currently investing in the solutions offered by JANZZ.technology. Above all its ontology. Data quality is becoming a focal point of competition in the digital labor and recruitment market.